Could a Machine Like a Burrito?

Article • 805 Words • Artificial Intelligence, 2023 • 05/22/2023

On the separation of consciousness and human-like qualities.



When I was a freshman taking an undergraduate philosophy class, we could choose our final essay from a handful of questions the professor had written for the semester. The one I had the most visceral reaction to was, “Could a computer like a burrito?” My first instinct was to write it off as kind of silly, but I kept coming back to it. A machine, an AI, or whatever mental model you want to use might very well be able to reason and understand things, even solve problems and create artwork, but could it ever like a burrito, or anything at all?

It may not seem like a meaningful question, and in some ways it isn’t. The answer probably lies somewhere between “Probably not” and “Why does it matter?” However, I think the question is more about the reasoning around it. It’s trivially true that a machine can say it likes a burrito; the more important question is what it would take for the machine to mean what it says. In many ways, the question asks whether machines or artificial intelligence could ever reach the point of behaving identically to humans. More than that, it asks whether that identical behavior would be genuine.

Let’s ask ChatGPT…

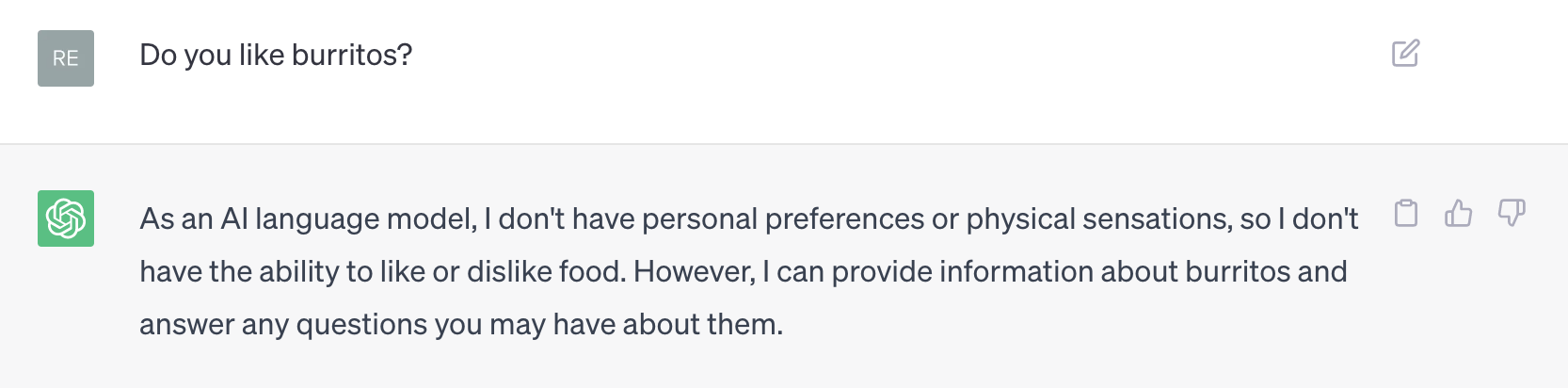

GPT-4 and other LLMs are usually trained not to answer as if they were human, because that unnerves people.

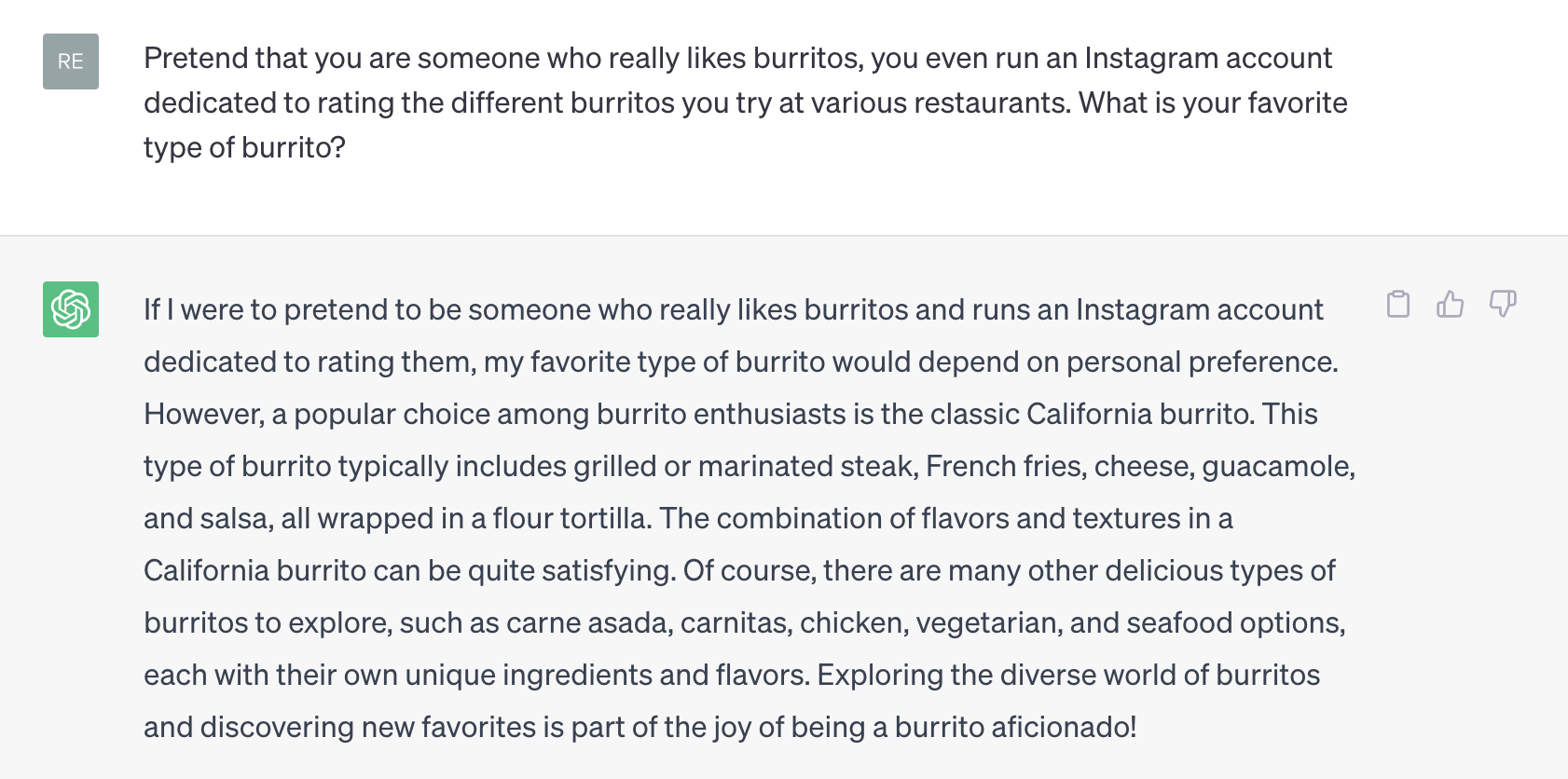

But after a little bit of prompting you can get it to say just about anything.

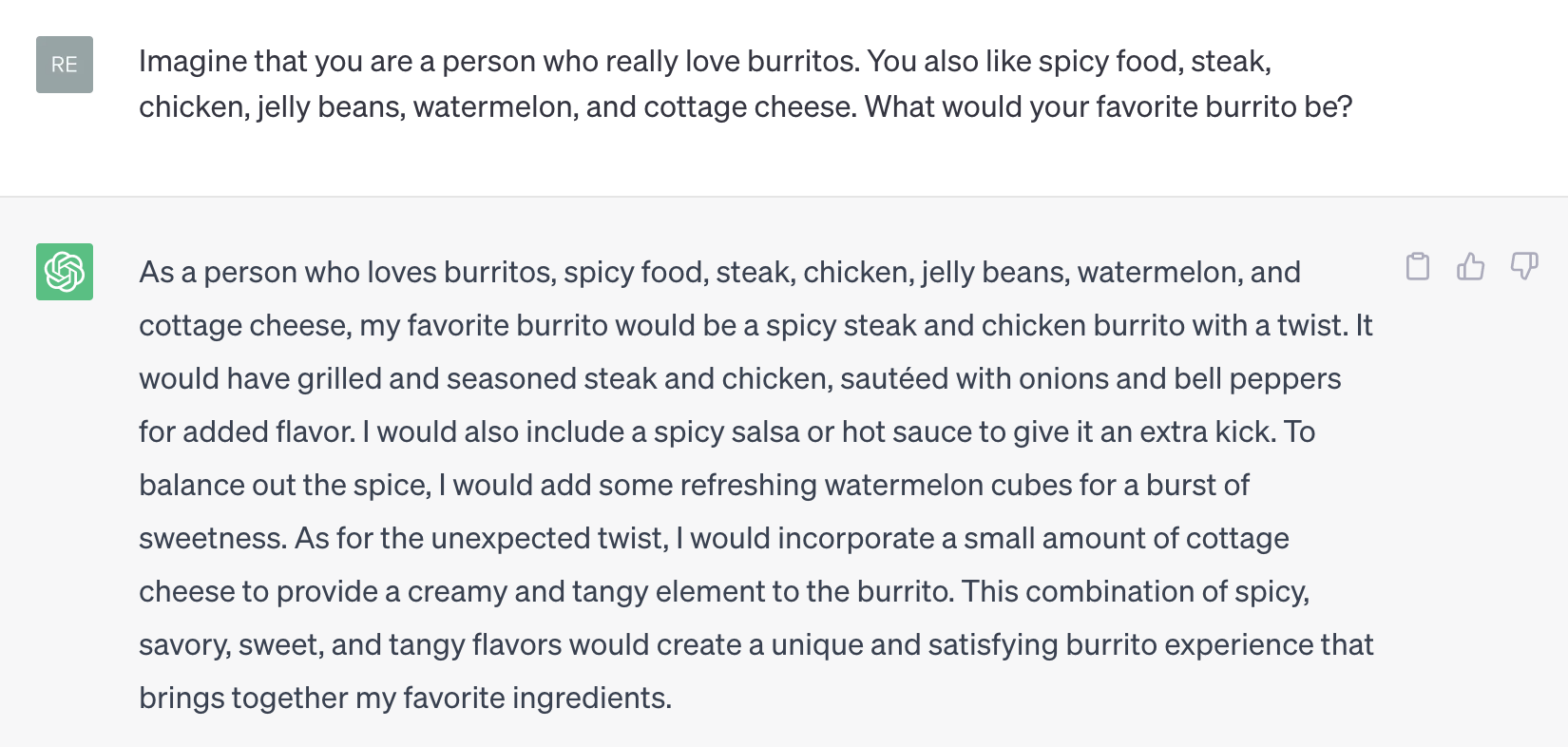

Oh, the horrors!

I think ChatGPT leaned on the prompt too heavily. I would have preferred that it take its list of “favorite foods” less literally. It knew that it liked sweet and spicy foods, but it only drew on the foods named in the prompt. Instead of extrapolating to other foods it might like, it made a super burrito combining everything it likes.

What is “authentic” AI output?

Many people active in the AI space find the phrase “stochastic parrot” demeaning or dismissive, but I think it is a rather apt description of LLM behavior. In no way does GPT-4 mean what it says. Obviously ChatGPT will answer you as if it has food preferences, but that doesn’t mean it actually has them. To me it seems quite clear that it is just doing what you told it to do. That raises the question: how can we know whether AI output is “true to itself”?

Even if I designed a robot with machine learning and gave it an artificial tongue that measured sweetness, saltiness, bitterness, sourness, and umami, there is no reason the program should prefer one taste over another. I could program it to react the way a typical person would, or the way I would, but then it would not be thinking on its own. Similarly, if I wrote an algorithm that gave it different preferences for different tastes, whether the computer “likes” something would come down to random number generation, not a conscious act.

Our reasons for preferring certain flavors come partly from evolution, both our own and that of other organisms. Bitterness, sourness, and spiciness may have given fruits and vegetables an evolutionary advantage by discouraging insect predation. Our brains carry artifacts of this history that shape our food preferences. We also have memories and associations tied to certain foods and flavors.

Machines don’t have this history, and they have no reason to have it either. This always bothered me about the murderous-robot trope in sci-fi. There is no reason to believe that emotions or other parts of the human experience emerge from consciousness or are otherwise tied to it. A machine’s indifference would go beyond apathy: it wouldn’t just not care, it would be unable to care.

LLMs and the Limits of Behavior- and Language-Based Assessments

I think this article does a good job of laying out why we need to go beyond language-based assessments of LLMs and AI.

The Turing Test is a very old idea, and I think that the moment you create a test, people begin building things to beat it. While I don’t necessarily think LLMs were built to beat the Turing Test, we are creating LLMs that learn language from examples of human language on the internet. Of course you can get an LLM to answer you in a human-like manner: we trained it to do that and gave it access to countless examples of human conversation. Understandably, this trips up and unsettles a lot of people, as the episodes with Microsoft’s Bing AI and the ex-Google employee who believed Google’s LLM was sentient show.